On human intuition, codifying knowledge and resilience

Coming out of Dave Snowden's two-day workshop on Complexity-Based Design Thinking at DDDEurope 2019, it's easy to have many thoughts spinning in your head, and it definitely takes time to reflect on the dense knowledge taken in over just two days.

One thing that stuck with me, and which I have reflected on a lot, was how allowing humans to make decisions and use their intuition increases the resilience of a system, and how codifying processes into explicit steps limits humans' ability to apply their experience and intuition, since they have to follow these pre-defined steps.

When I say "codifying processes into explicit steps", I mean taking a process that a person handles by making decisions based on their knowledge and experience, and turning it into an explicit process. That could, for example, mean creating documentation to explain how the process works (and therefore having to explain how they make the decisions). Or it could mean creating software to automate the decisions (which, again, requires explaining how they make the decisions). Have you ever tried explaining your rationale for a decision in a complex problem to someone? Most likely it involved, in some way, trusting your intuition (or "gut feeling"). Or maybe you simplified the problem into one that was easier for you to solve.

That's not to say that making processes explicit is necessarily bad. There are many advantages to doing this: following a pre-defined process will usually be faster and more efficient, and it also makes the process easier to distribute, as new people can understand it more easily than if they had to learn from someone else's intuition and experience. It will of course also make processes more predictable, and predictability is often good.

However, I think we are usually not consciously aware of the consequences of codifying human decision-making into explicit processes. Primarily, it means that there's little room for handling similar problems that might be misinterpreted as the same problem, and that therefore don't actually fit the process. This is where humans will apply their experience and intuition. The other problem is that it's hard to keep our explicit processes up to date as the world around us changes. This is where humans will learn, and adjust their experience and intuition. If we're lucky (or good), we might detect the weak signals and be able to readjust. If we're unlucky (or complacent), we might experience a collapse into chaos.

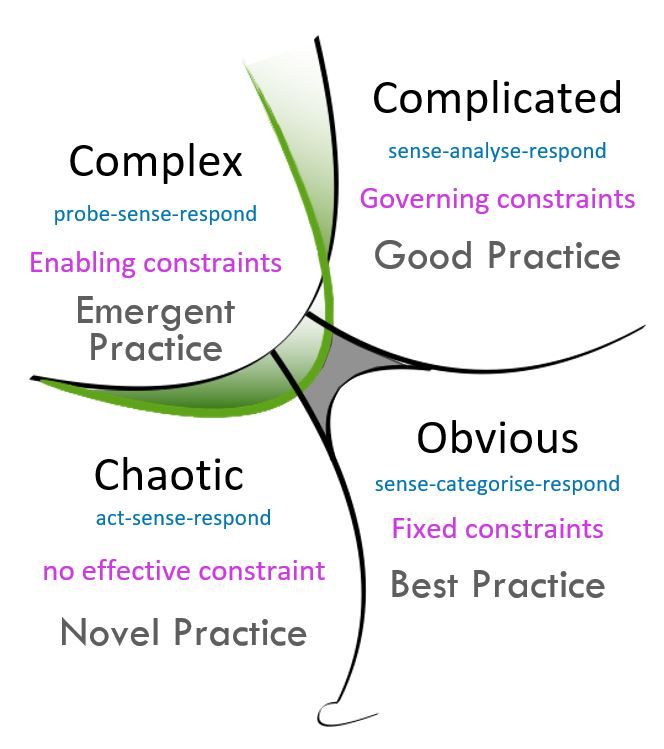

At this point, I think it can be good to look at the Cynefin Framework.

The obvious domain is, obviously, a place where explicit processes (like automation) are a good fit. Here the manual solution will often follow something like a checklist, or already be codified in some way. If not, it should at least be easy to explain and follow very specific rules.

As we move into the complicated domain, it becomes harder to codify the knowledge and thought processes that happen when decisions are made. While it might still be possible to fully automate the processes, doing so will require deep insight from domain experts, and we should probably consider applying DDD principles to gain insight into the domain. We'll probably also notice experts using more heuristics and fewer rules, but both will usually be applied here.

As we move towards the complex domain, it is likely that decisions are made entirely by applying heuristics rather than rules, and fully automating processes will be very hard, if not outright impossible (and probably very risky, since mistakes might be frequent and costly). At this point it's extremely important to realise the cost of trying to remove human intuition from decision-making. We can (and should!) still create tools to help humans get the information they need as fast as possible, but fully automating the process is probably not a good idea. We can also design for human intervention by trying to identify the cases where our automatic processes do not fit, and bringing these to human decision-makers for manual handling.
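To make "design for human intervention" a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical and only for illustration: the `Rule` and `Router` names, the refund example and the "exactly one rule fits" criterion are my assumptions, not something from the workshop. The idea is simply that a case is automated only when it clearly fits a codified rule, and anything else lands in a queue for a person to look at.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Rule:
    """A codified decision (hypothetical structure, for illustration only)."""
    name: str
    matches: Callable[[dict], bool]  # does this case clearly fit the rule?
    decide: Callable[[dict], str]    # the explicit, pre-defined decision


@dataclass
class Router:
    rules: list
    human_queue: list = field(default_factory=list)

    def handle(self, case: dict) -> Optional[str]:
        fitting = [rule for rule in self.rules if rule.matches(case)]
        # Automate only when exactly one rule fits. No match, or several
        # competing matches, means the case does not fit our process.
        if len(fitting) == 1:
            return fitting[0].decide(case)
        self.human_queue.append(case)  # escalate to a human decision-maker
        return None


# Hypothetical example: only small refunds with a receipt are automated.
small_refund = Rule(
    name="small refund with receipt",
    matches=lambda c: (
        c.get("type") == "refund"
        and c.get("amount", 0) <= 50
        and c.get("receipt", False)
    ),
    decide=lambda c: "approve",
)

router = Router(rules=[small_refund])
outcome_a = router.handle({"type": "refund", "amount": 20, "receipt": True})
outcome_b = router.handle({"type": "refund", "amount": 20, "receipt": False})
```

Here the first case is decided automatically, while the second (a refund without a receipt) looks similar but does not fit the rule, so it ends up in `router.human_queue` instead of being forced through the process. The hard part in practice is of course deciding what "clearly fits" means, and that is itself a judgement that needs revisiting as the world changes.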

I chose the post's preview picture because I find rubber bands an interesting image of one kind of resilience. A rubber band is resilient to change, but once it is stretched too far, the failure could be described as "catastrophic", as it snaps suddenly.